AI assistants equipped with the right data sources and tools can help you find information faster, write more accurate code, and test your Airwallex integrations more easily. Airwallex provides the following tools to help you supercharge your integration experience with AI.

Developer MCP server

Model Context Protocol (MCP) is an open standard that enables AI assistants to interact with external systems and data sources. It defines how coding assistants discover and invoke tools, ensuring consistent behavior across different AI models and platforms.
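MCP messages are JSON-RPC 2.0 objects, and tool discovery and invocation use the `tools/list` and `tools/call` methods. The minimal Python sketch below builds those two requests as plain dictionaries. It omits the `initialize` handshake a real client performs first, and the tool name `search_docs` and its `query` argument are hypothetical placeholders, not tools confirmed to exist on the Airwallex Developer MCP server.

```python
import json
from itertools import count
from typing import Optional

# JSON-RPC request IDs must be unique per session; a simple counter suffices here.
_request_ids = count(1)


def make_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object, the message format MCP uses."""
    msg = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


# 1. Discover which tools the server exposes.
list_tools = make_request("tools/list")

# 2. Invoke a tool by name with JSON arguments.
#    "search_docs" and "query" are HYPOTHETICAL names used for illustration only.
call_tool = make_request(
    "tools/call",
    {"name": "search_docs", "arguments": {"query": "create a payment intent"}},
)

print(json.dumps(list_tools))
print(json.dumps(call_tool))
```

In practice your AI assistant's MCP client sends these messages for you; the sketch only shows what "discover and invoke tools" means on the wire.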

The Airwallex Developer MCP server connects your AI assistant to Airwallex documentation, API reference, and sandbox testing tools. The server can run locally on your machine or connect to remote endpoints, and works with popular AI assistants including Cursor, Claude Code, Gemini CLI, OpenAI Codex, Lovable, V0, and Repl.it.

For installation instructions, configuration examples, and usage details, see Developer MCP server.

Plain-text documentation

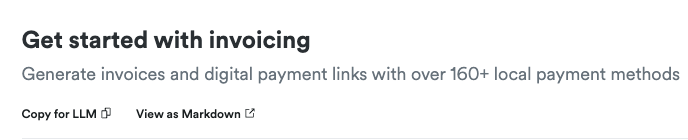

All pages in both the Airwallex Product documentation and API reference API are available in plain text - formatted as Markdown documents optimized for AI processing. You can access these documents or copy their contents using menus made conveniently available at the top of pages and sections as shown below:

A curated index of our product documentation, formatted according to the proposed llms.txt standard, is also available.
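An llms.txt file is itself Markdown: an H1 title, an optional blockquote summary, then H2 sections containing link lists of the form `- [Title](URL): notes`. The sketch below, assuming that layout, groups the links by section so a script or agent can pick which pages to load. The sample content and `example.com` URLs are hypothetical and do not reflect the actual Airwallex index.

```python
import re

# Matches llms.txt list items: "- [Title](URL)" with optional ": notes".
LINK_RE = re.compile(
    r"^-\s*\[(?P<title>[^\]]+)\]\((?P<url>[^)\s]+)\)(?::\s*(?P<notes>.*))?$"
)


def parse_llms_txt(text: str) -> dict[str, list[dict[str, str]]]:
    """Group llms.txt link entries by their H2 section heading."""
    sections: dict[str, list[dict[str, str]]] = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("## "):
            current = line[3:].strip()
            sections.setdefault(current, [])
        elif current is not None:
            match = LINK_RE.match(line)
            if match:
                sections[current].append({
                    "title": match["title"],
                    "url": match["url"],
                    "notes": match["notes"] or "",
                })
    return sections


# HYPOTHETICAL sample index for illustration only.
SAMPLE = """# Example Docs

> Hypothetical summary line.

## Payments

- [Accept payments](https://example.com/docs/payments.md): Overview of payment flows
- [Refunds](https://example.com/docs/refunds.md)

## Optional

- [Changelog](https://example.com/docs/changelog.md)
"""

sections = parse_llms_txt(SAMPLE)
for name, links in sections.items():
    print(name, [link["url"] for link in links])
```

Under the llms.txt proposal, links in a section named `Optional` can be skipped when a shorter context is needed, which is why keeping section names is useful.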

You can share the plain-text content, or its URL, with an AI assistant as context for answering your questions or for powering agent-driven workflows that build Airwallex integrations.
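A minimal sketch of passing plain-text pages to a model as context, using only the Python standard library. `fetch_markdown` downloads a Markdown page, and `build_messages` wraps each page in labelled delimiters ahead of the question. The role/content message list is a common chat-API convention, not any specific vendor's API, and the URL and page body in the usage example are hypothetical.

```python
import urllib.request


def fetch_markdown(url: str, timeout: float = 10.0) -> str:
    """Download a plain-text (Markdown) documentation page."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset)


def build_messages(question: str, docs: dict[str, str]) -> list[dict[str, str]]:
    """Wrap each page in labelled delimiters so the model can cite its source."""
    context = "\n\n".join(
        f'<doc source="{url}">\n{body.strip()}\n</doc>' for url, body in docs.items()
    )
    return [
        {"role": "system",
         "content": "Answer using only the documentation provided.\n\n" + context},
        {"role": "user", "content": question},
    ]


# Usage with an in-memory page; in practice the body would come from
# fetch_markdown(url). The URL below is HYPOTHETICAL.
messages = build_messages(
    "How do I issue a refund?",
    {"https://example.com/docs/refunds.md": "# Refunds\nHypothetical page body.\n"},
)
print(messages[0]["content"])
```

Labelling each page with its source URL lets the assistant point back to the page it relied on, which makes answers easier to verify.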

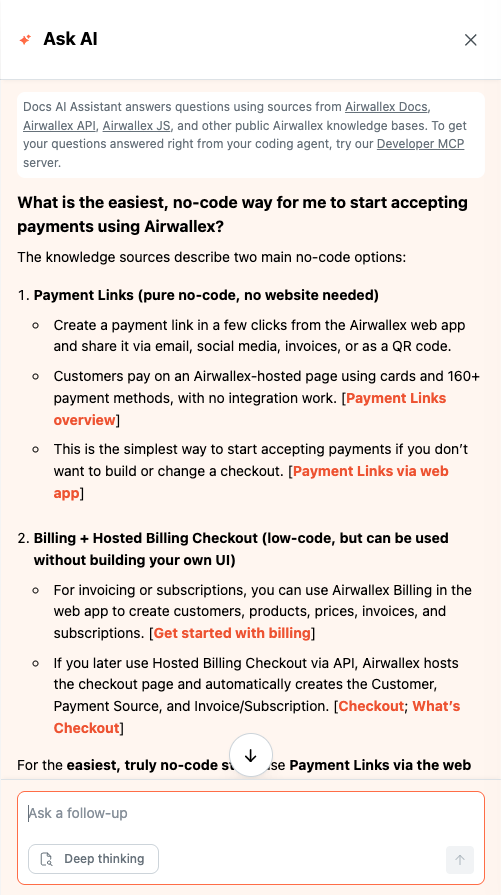

Docs AI Assistant

Docs AI Assistant is a web-based AI assistant that you can access directly from the top navigation of the documentation website:

Drawing on up-to-date content from all of Airwallex's public knowledge sources, the assistant can help you explore the platform's capabilities, get quick answers to your questions, and fast-track your integration, however complex it is.